Truck of tomorrow - Professional HMI

We foresee the emergence of self-employed truck drivers by 2030, when computing power will be sufficient to support an efficient, outsourced logistics system. With the support of automation technologies, truck drivers will be able to handle logistics tasks even while on the road. The final solution combines a heads-up display (HUD) with audio feedback and a tactile dashboard to present scheduling information for freight transport.

Skill: Video prototype | Wireframe | Sound design

Partner: Scania | Swedish ICT

Duration: 6 weeks

Time: Fall 2013

With: Júlia Nacsa | Regimantas Vegele

Video prototype

Video prototype scenario

We decided to focus only on the logistics part of the scenario because we consider the logistics perspective our main contribution to the future of trucking.

We extended the scenario with the transitions between the automated (Logistics) and manual (Drive) modes.

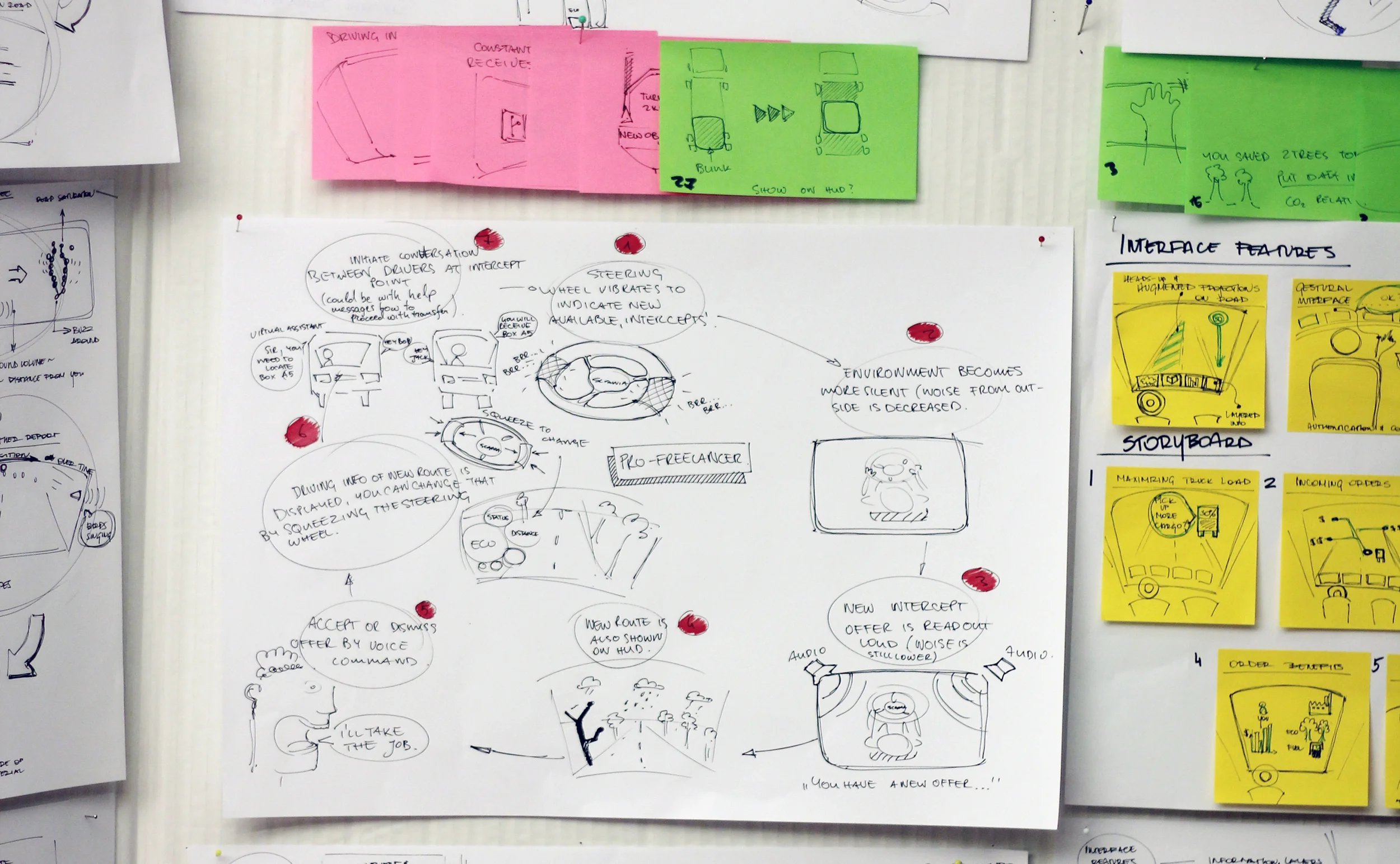

Modalities of interface

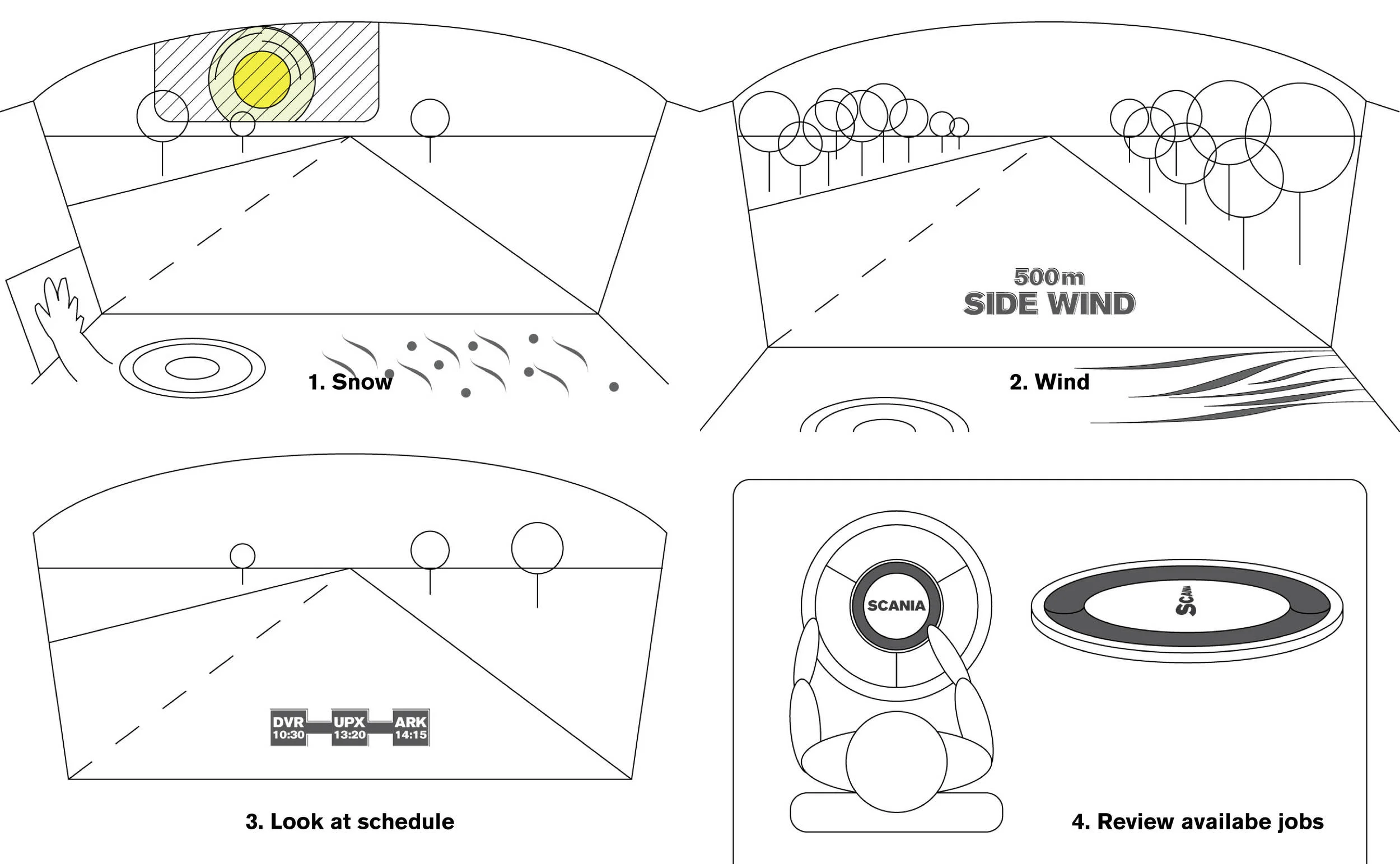

The interface uses three modalities: visual, audio and tactile.

The visual modality is the main source of information; the audio modality reinforces the visuals and raises attention; and the tactile modality serves both as an input device and as a peripheral source of additional information.

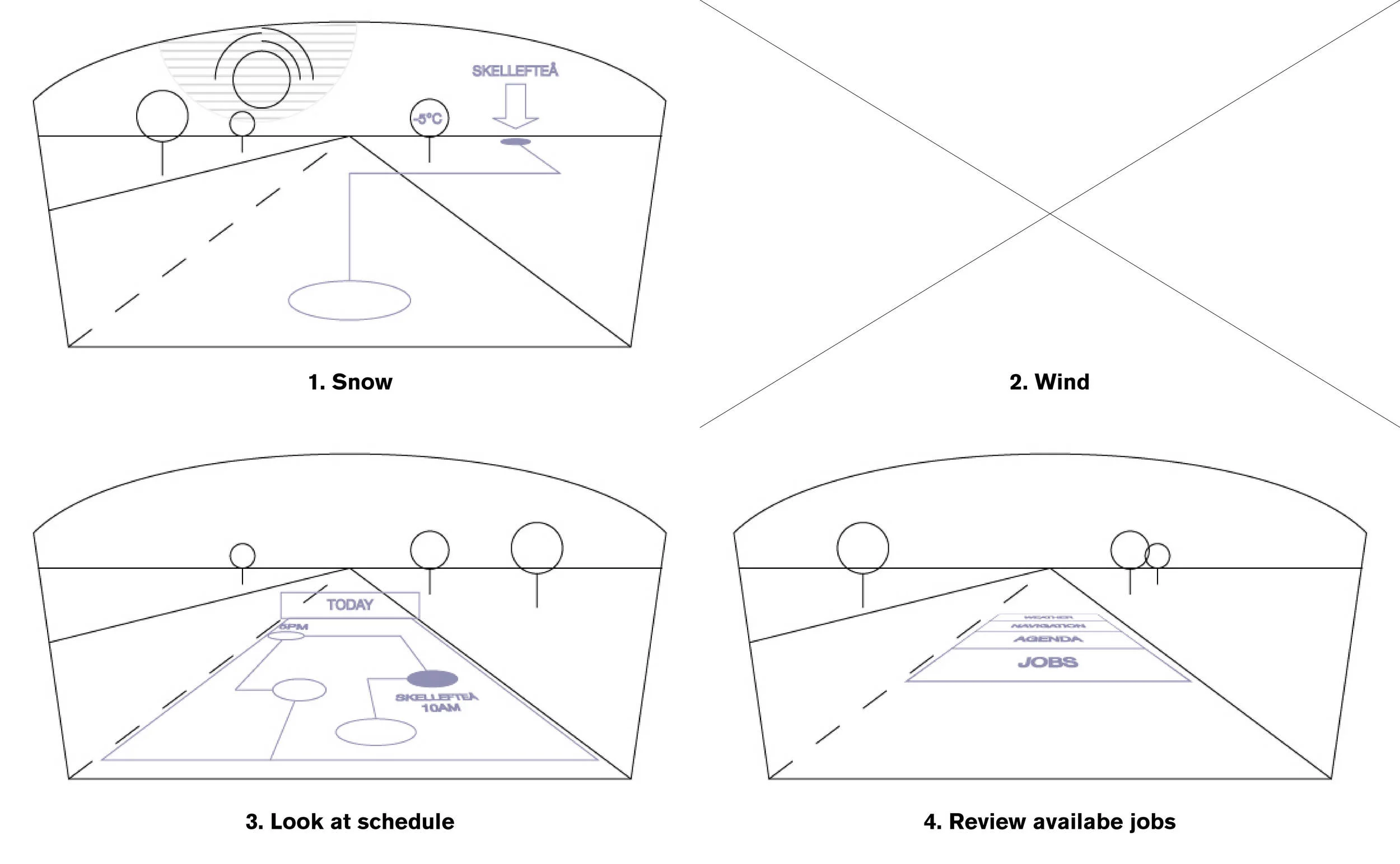

Heads-up display signature interface patterns

Job offer #1

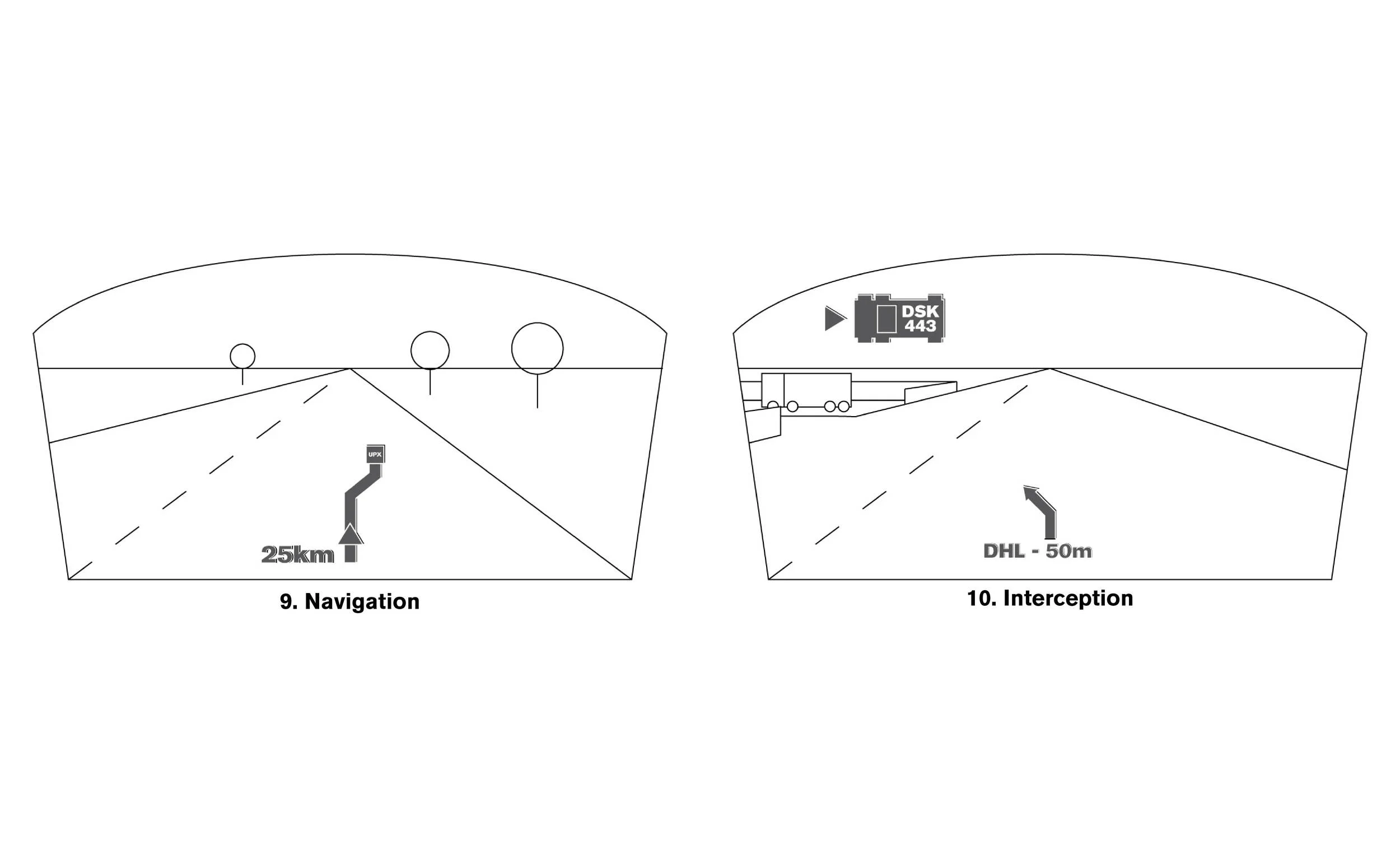

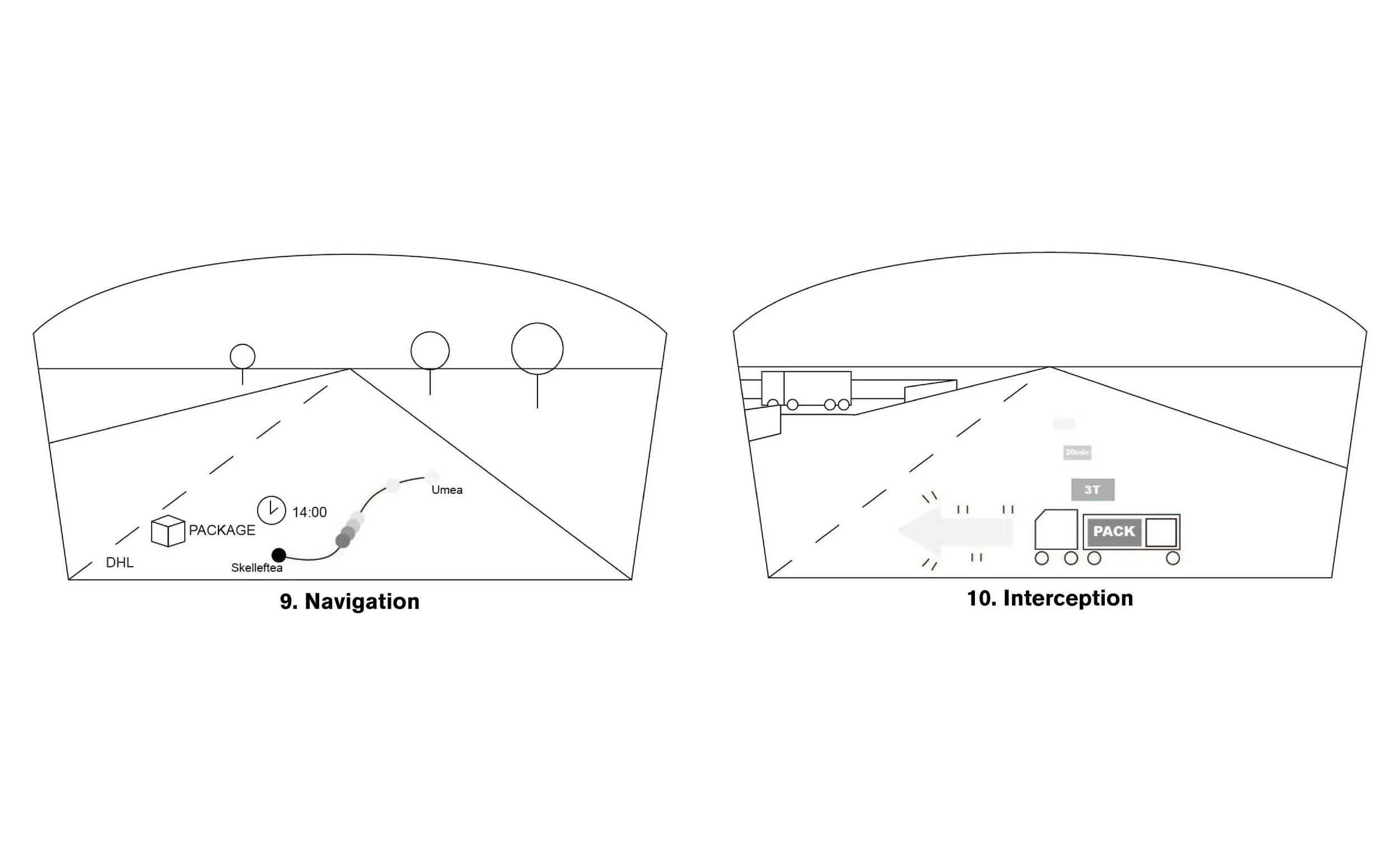

All crucial information about scheduling, destinations and navigation is displayed explicitly on the HUD. The graphics differ between Drive and Logistics mode. The job date, destinations, income, distance and time are shown, along with a GPS map.

Tactile patterned surfaces sit at the edge of the windscreen (conveying weather) and on the dashboard (conveying road conditions).

Job offer #2

The patterned surface can be changed dynamically through electromechanical control in several ways (e.g. raising or lowering separate surface segments, or using smart materials that react to electric current, such as shape memory alloys and conductive plastics). The intention behind this informative surface was to relieve the HUD of information overload without withholding important, though not crucial, information from the driver.

1. Switch to Logistics Mode

2. Task review

3. Receive task confirmation

4. Receive task decline

Physical control: gesture patterns

1. Hold to switch mode

2. Spin to browse tasks

3. Tap to apply for a task
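The three gesture patterns can be read as a small input-to-action mapping. A minimal Python sketch follows; the class name, the hold threshold `HOLD_SECONDS` and the rule that browsing and applying only work in Logistics mode are our own illustrative assumptions, not part of the prototype.

```python
from dataclasses import dataclass, field

# Hypothetical threshold: the concept does not specify how long a "hold" lasts.
HOLD_SECONDS = 1.0


@dataclass
class TactileController:
    """Illustrative model of the three gesture patterns: hold, spin, tap."""
    mode: str = "Drive"
    tasks: list[str] = field(default_factory=list)
    index: int = 0
    applied: list[str] = field(default_factory=list)

    def hold(self, seconds: float) -> None:
        # 1. Hold to switch mode: a long enough hold toggles Drive <-> Logistics.
        # (The system's safety check before entering Logistics mode is omitted.)
        if seconds >= HOLD_SECONDS:
            self.mode = "Logistics" if self.mode == "Drive" else "Drive"

    def spin(self, steps: int) -> None:
        # 2. Spin to browse tasks; negative steps spin backwards, wrapping around.
        # Assumption: browsing is only active in Logistics mode.
        if self.mode == "Logistics" and self.tasks:
            self.index = (self.index + steps) % len(self.tasks)

    def tap(self) -> None:
        # 3. Tap to apply for the currently highlighted task (Logistics mode only).
        if self.mode == "Logistics" and self.tasks:
            self.applied.append(self.tasks[self.index])
```

For example, `TactileController(tasks=["job A", "job B"])` followed by `hold(1.5)`, `spin(1)` and `tap()` ends with `applied == ["job B"]`.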

Sound design with Node beat: audio feedback

In Logistics mode, audio draws the driver's attention when they are not looking through the windscreen. In Drive mode, it complements and reinforces visual events such as input feedback, notifications and warnings.

Each sample uses two harmonic layers: a fundamental bass line and a dominant melody.

The system uses two different earcons to represent the interest level of incoming offers, helping the driver classify information faster and more accurately in Drive mode.
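The audio rules above can be summarised as a single decision function. This is a minimal sketch under stated assumptions: the cue names, the event labels and the two interest levels `"high"`/`"low"` are hypothetical, and we read the text as meaning audio stays silent in Logistics mode while the driver is already looking at the windscreen.

```python
from typing import Optional

# Hypothetical event labels for visual events that audio reinforces in Drive mode.
VISUAL_EVENTS = {"input_feedback", "notification", "warning"}


def audio_cue(mode: str, event: str, looking_at_windscreen: bool,
              interest: Optional[str] = None) -> Optional[str]:
    """Return the name of the (hypothetical) sound to play, or None for silence."""
    if mode == "Logistics":
        # Logistics mode: audio only raises attention when the driver's eyes
        # are off the windscreen, where the HUD is.
        return None if looking_at_windscreen else "attention"
    # Drive mode: incoming offers get one of two earcons by interest level.
    if event == "offer":
        return "earcon_high_interest" if interest == "high" else "earcon_low_interest"
    # Other visual events are reinforced with a matching sound.
    if event in VISUAL_EVENTS:
        return event
    return None
```

Keeping the earcon choice to two levels mirrors the design intention: the driver only has to tell two sounds apart to decide whether an offer deserves a look.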

Persona

Our persona, Petra (35), used to work for a large logistics and transportation company. She decided to start freelancing so she could have more flexibility and freedom in planning her own schedule. Petra noticed that the Umeå region had a fast-growing industrial district with many transport-intensive sectors.

Future Logistics Scenario

In our logistics scenario we present a collaboration between two industries: a rigid one, operating within large transport infrastructure, and a flexible one, operating within a local, community-based industrial district. Our persona works between the local community and the large industry, freelancing on jobs that the large industry decides to outsource as well as jobs that the industrial district offers.

Level of automation

We alternate between the two extremes of automation: manual and fully automated driving. We envision that by 2030 there will be a fully automated driving system. The system will base its decisions on data about road conditions, weather forecasts, traffic conditions and similar factors. If the system deems the conditions unsafe for automated driving, it will notify the driver to take over control. If the driver does not respond to the notification, the truck will slow down or stop by itself.

We therefore define two modes in the truck's automation system: Drive mode and Logistics mode. In Drive mode, the driver operates the vehicle and can switch to automated driving whenever the system deems it safe (i.e. when Logistics mode is available). In Logistics mode, driving is fully automated, and the driver can manage logistics tasks such as scheduling or applying for job offers.
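The hand-over rules can be sketched as a small state machine. This is a minimal Python illustration, not the project's implementation: the intermediate states, the class and method names, and the response window `TAKEOVER_TIMEOUT_S` are hypothetical, and the safety assessment itself is passed in as a boolean.

```python
from enum import Enum

# Hypothetical value: the concept does not state how long the driver has to respond.
TAKEOVER_TIMEOUT_S = 10.0


class Mode(Enum):
    DRIVE = "Drive"                        # driver operates the vehicle
    LOGISTICS = "Logistics"                # fully automated driving
    TAKEOVER_REQUESTED = "Takeover requested"
    SAFE_STOP = "Slowing down or stopping"


class AutomationController:
    """Illustrative hand-over logic between Drive and Logistics mode."""

    def __init__(self) -> None:
        self.mode = Mode.DRIVE
        self.request_time = 0.0

    def request_logistics(self, conditions_safe: bool) -> bool:
        # The driver can hand over control only when the system deems it safe.
        if self.mode is Mode.DRIVE and conditions_safe:
            self.mode = Mode.LOGISTICS
            return True
        return False

    def update(self, conditions_safe: bool, now: float) -> Mode:
        # Called periodically with the system's latest assessment of road,
        # weather and traffic data (the assessment itself is out of scope here).
        if self.mode is Mode.LOGISTICS and not conditions_safe:
            self.mode = Mode.TAKEOVER_REQUESTED  # notify the driver
            self.request_time = now
        elif (self.mode is Mode.TAKEOVER_REQUESTED
              and now - self.request_time >= TAKEOVER_TIMEOUT_S):
            self.mode = Mode.SAFE_STOP  # no reply: the truck slows down or stops
        return self.mode

    def take_control(self) -> None:
        # The driver takes over, either voluntarily or after a notification.
        self.mode = Mode.DRIVE
```

Modelling the unanswered notification as its own state makes the fallback explicit: the truck never silently stays in automated driving once the system has judged the conditions unsafe.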

Information and data flow

In our concept, this information comes from several providers. Data can be either local or received from external sources. The truck system helps the driver manage the information flow by filtering it according to the driver's preferences, so the driver only sees the information relevant to their decision-making.
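Preference-based filtering can be illustrated with a few lines of Python. This is a minimal sketch under assumptions of our own: the `Info` and `Preferences` types, the topic labels and the minimum-income rule for job offers are hypothetical stand-ins for whatever preferences a real system would store.

```python
from dataclasses import dataclass, field


@dataclass
class Info:
    source: str    # "local" (the truck's own data) or "external"
    topic: str     # hypothetical labels such as "job_offer" or "weather"
    payload: dict = field(default_factory=dict)


@dataclass
class Preferences:
    topics: set[str]          # topics the driver has opted into
    min_income: float = 0.0   # hypothetical threshold applied to job offers


def filter_for_driver(items: list[Info], prefs: Preferences) -> list[Info]:
    """Keep items on topics the driver opted into; job offers must also meet min_income."""
    kept = []
    for item in items:
        if item.topic not in prefs.topics:
            continue
        if item.topic == "job_offer" and item.payload.get("income", 0) < prefs.min_income:
            continue
        kept.append(item)
    return kept
```

Local and external items pass through the same filter, so the driver's preferences apply regardless of where the data comes from.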

Personal reflection

HMI is a huge ongoing discussion in the industry. Its complexity is nearly impossible to tackle within the discipline of interaction design alone: it is a large system spanning interaction design, industrial design, ergonomics, machine learning, data science and many more disciplines we may never even have thought of. Moreover, design scenarios usually oversimplify the automation system, including its biases, and the data processing behind it. As a student project, it was a good opportunity to explore and understand the complexity and constraints of HMI. The solution is more inspirational than practical.

Design learnings

- Use different modalities to handle complex interactions and information while reducing cognitive load: the visual modality as the main source of information; the audio modality as a reinforcing and attention-raising element; and the tactile modality as a peripheral source of additional information.

- Push each modality to its extreme to reveal its potential

- When predicting and building on a future scenario, secondary research alongside primary research can give the concept firmer grounding

Things I would have done differently

Get feedback from truck drivers about the future scenario

Test which information truck drivers need when deciding whether to take up a job, and how important each piece is

Explore graphic interface design and information visualisation for the HUD to make the text-heavy wireframe interface lighter, backed by secondary research on HUD design principles, e.g. scale and colour.

My contribution

As a group, we carried out user interviews, secondary research and ideation together, with several swap rounds during ideation. In the final modality building, I took the lead on sound design and gave feedback on the other modalities.

The challenge: predict future trends in freight transportation and translate them into a corresponding design solution for the truck environment.

1. How can we predict the most likely future of freight transportation in 2030?

We drew on our interviews with truck drivers and on secondary research into future logistics megatrends such as growing infrastructure congestion, increasing complexity, increased outsourcing, digitization and e-substitution. How can we synthesise all this research into design insights and future concepts?

2. How can we implement multiple modalities in the truck environment to reduce the truck driver's cognitive load?

We defined three modalities in our future scenario: visual, audio and tactile. How should information of differing detail and importance be distributed across these three modalities within a coherent interface?

3. How can we introduce an ideal level of automation in truck driving?

Human-machine interaction in the vehicle industry may involve different levels of automation, depending on the means of transport. The aviation industry, for example, adopted a very high level of automation decades ago. Three major roles can be divided between the driver and the system: moderator, generator and decider. How these roles shift between driver and system became a point of discussion for our design concept.

The process: Primary research interviews with truck drivers - Secondary research into automation and future logistics trends - Synthesis of research insights - Ideation pushing modalities to extremes - Modality synthesis - User test - Deliverable

Truck driver interview

User insight cards

Primary research: Field research with truck drivers

We conducted interviews and observations with three truck drivers. They did not talk about how difficult driving is or how automation affects them. Instead, they voiced concerns about security (whether they would still have a job in the near future), freedom and the future of their careers. These insights led us to look at the future of logistics and freight transport as a whole, instead of focusing on the activity of driving as it is today.

Secondary research: Future trends in logistics

We found a model that matches these predictions as closely as possible, that of a distributed economy, and used it to develop a future scenario for our truck driver to operate in.

A distributed economy scenario deals with relations that are “more complex than those in a centralised economy”. A major advantage is that it enables entities within the network to make much greater use of regional and local natural resources, finances, human capital, knowledge, technology and so on.

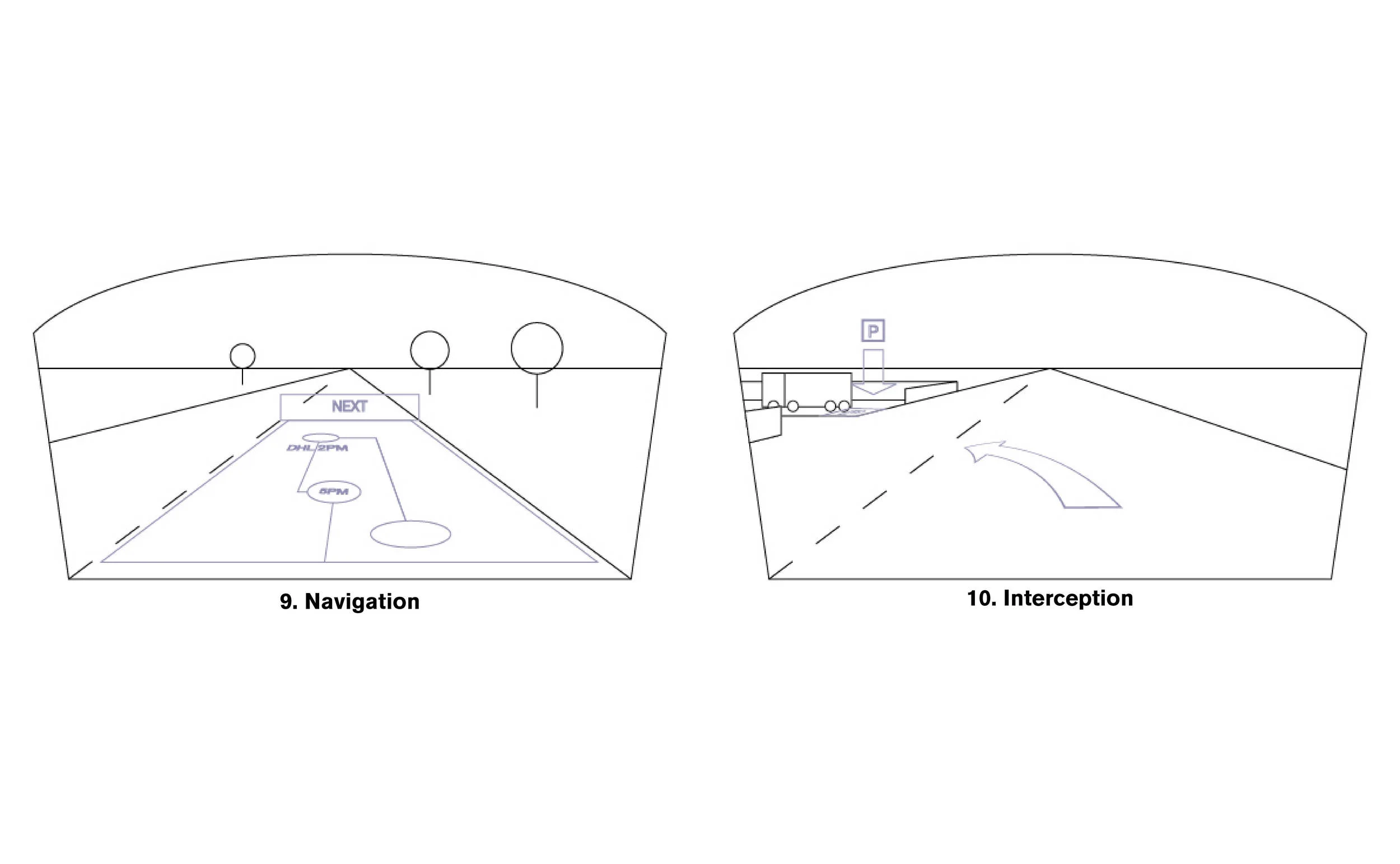

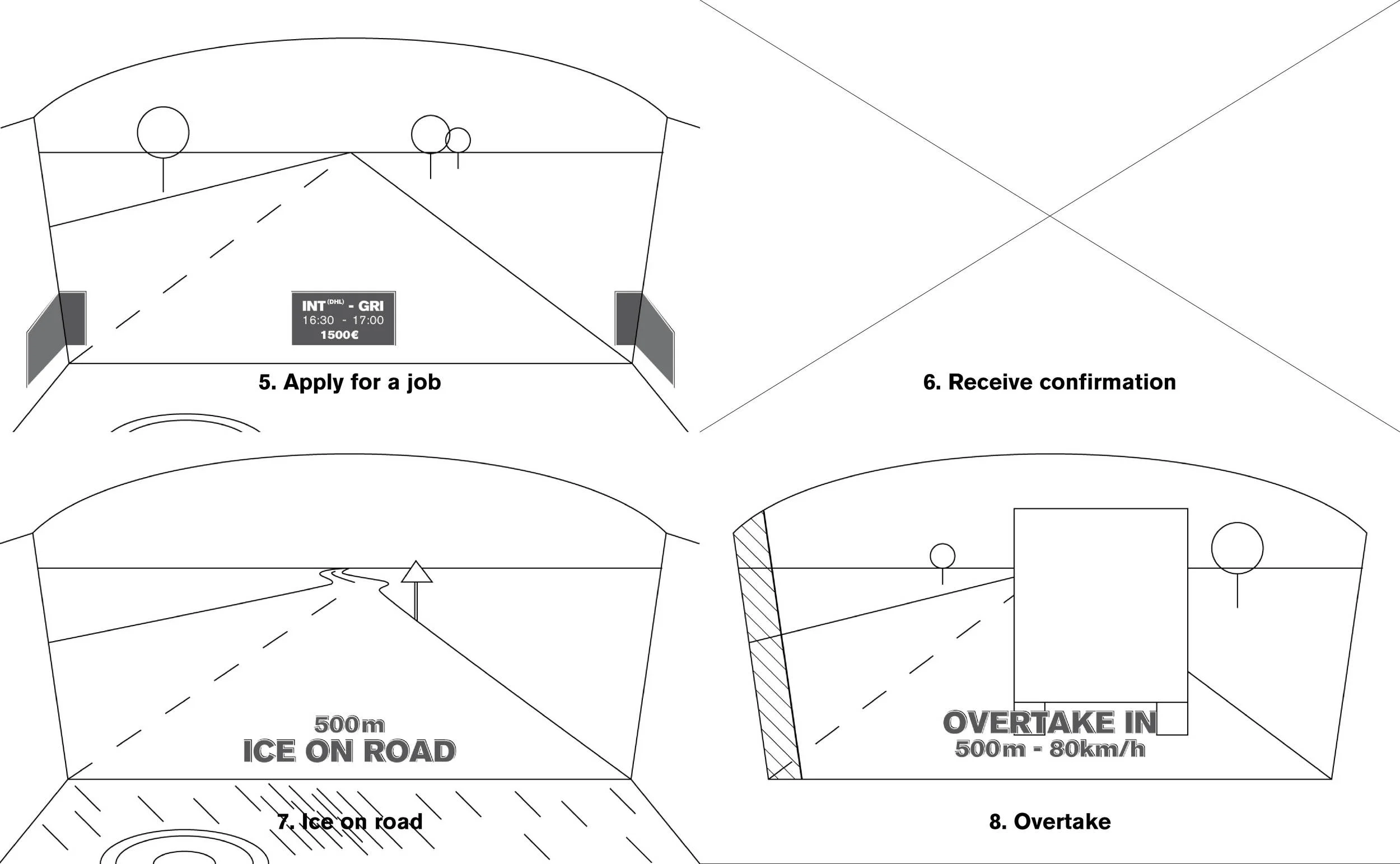

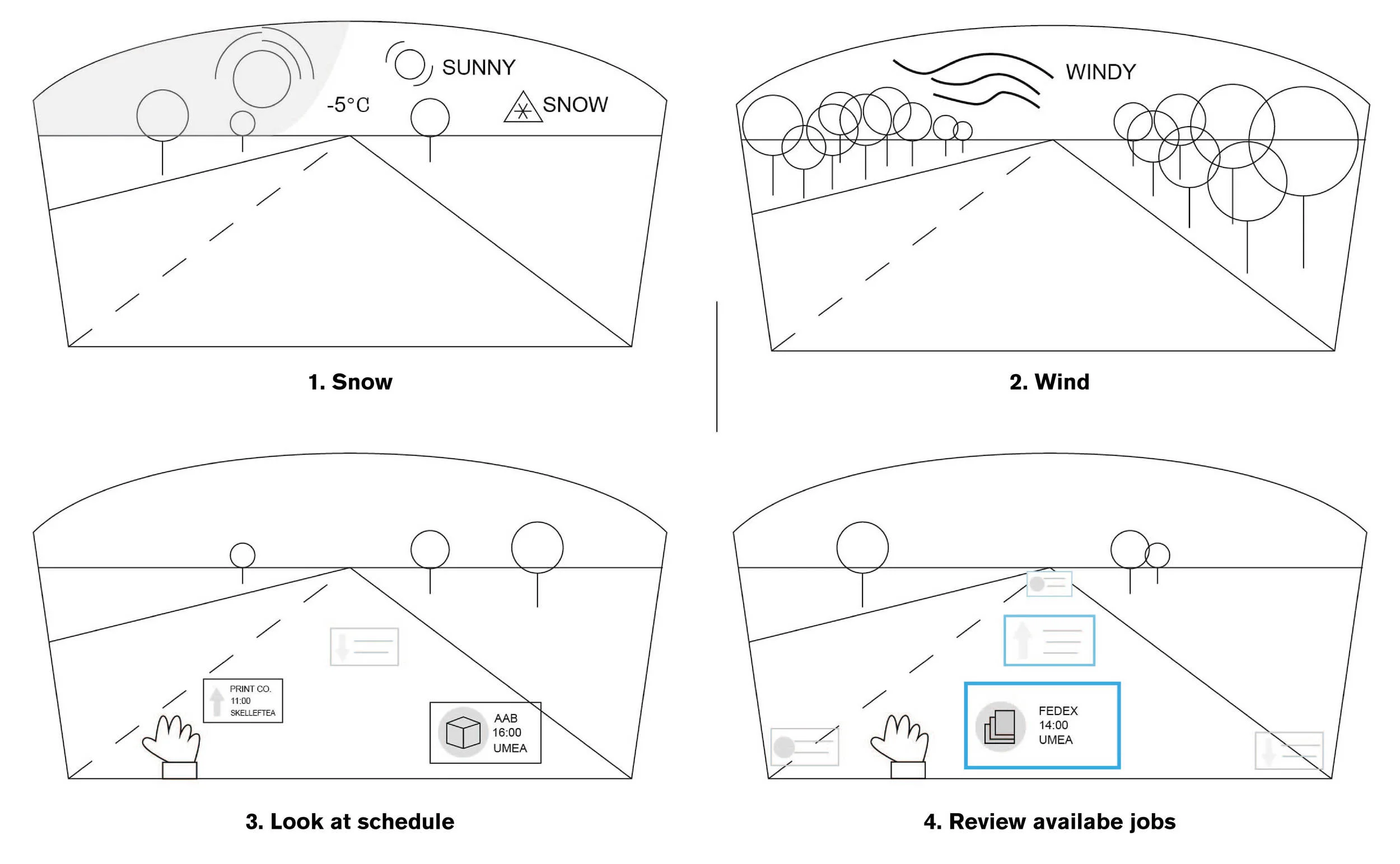

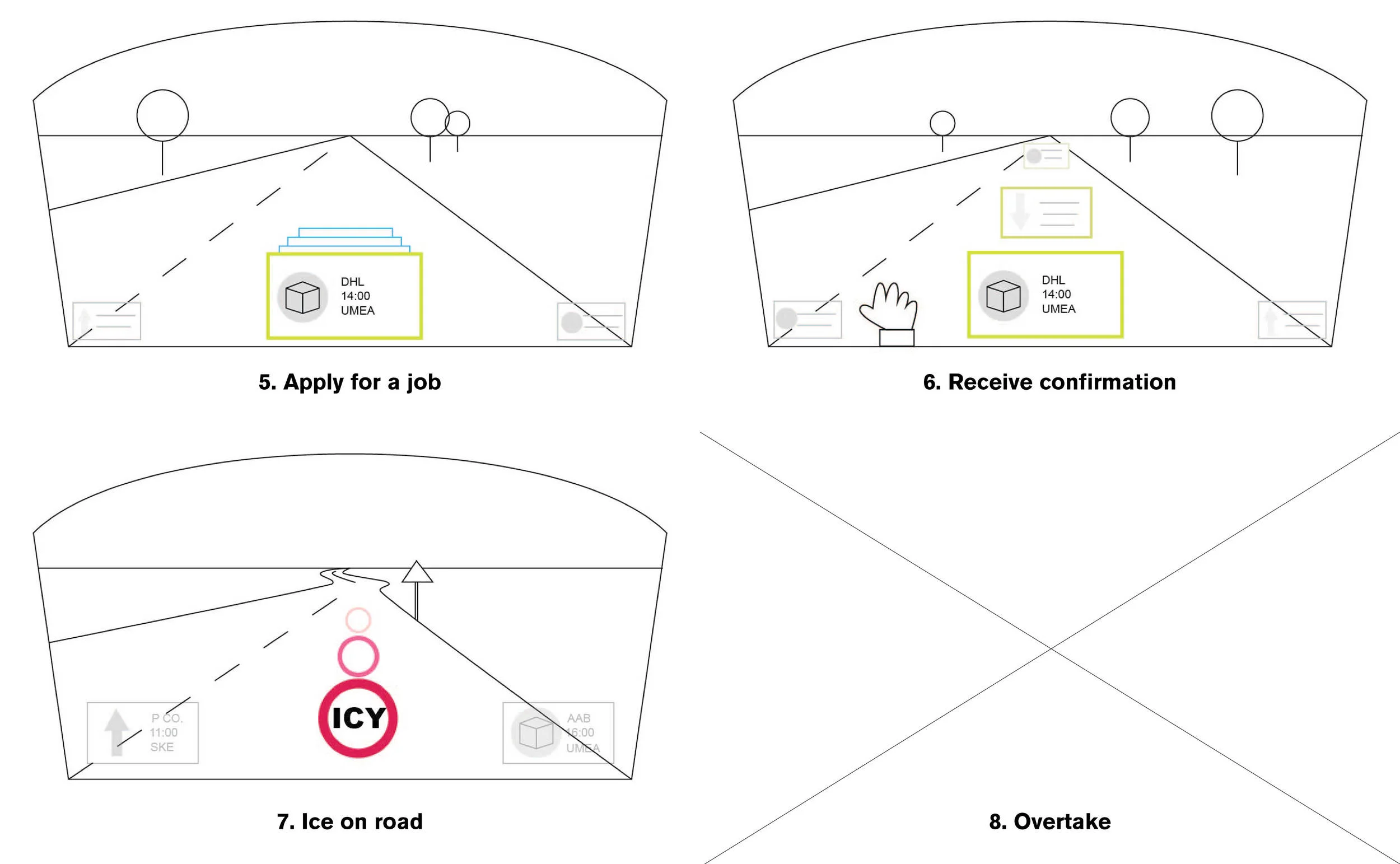

Steps of the logistics scenario

Interaction flow chart of the logistics scenario

Build the future logistics scenario & driver's tasks

By combining research findings such as flexibility and real-time routing with the driver's main values, we decided to design for a freelance truck driver, a role that already exists and is popular in other countries.

We specified ten steps that the driver would go through in the above-mentioned logistics scenario, covering both driving and logistics tasks. During these steps, the driver can switch between Logistics mode and Drive mode.

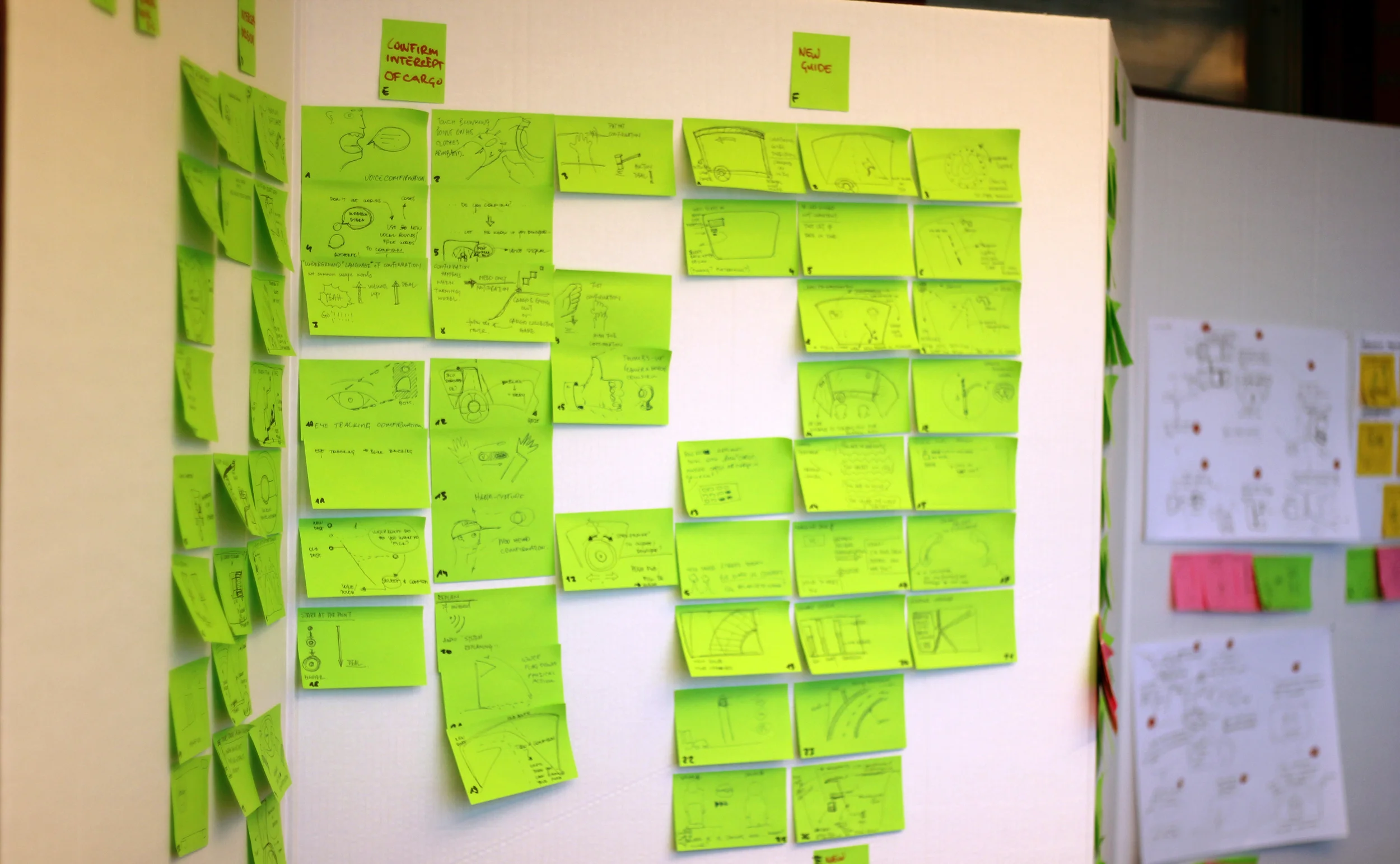

Ideation

Based on the future scenario, we held a brainstorming session to generate concepts that fit the driver's defined tasks. We narrowed these down to three modalities for further concept development: a 2D heads-up display (HUD) visual interface, a 3D HUD visual interface and a haptic interface.

We tried to fully explore the potential of each modality by pushing it to the extreme, that is, by presenting all information across all steps of the scenario through one modality at a time.

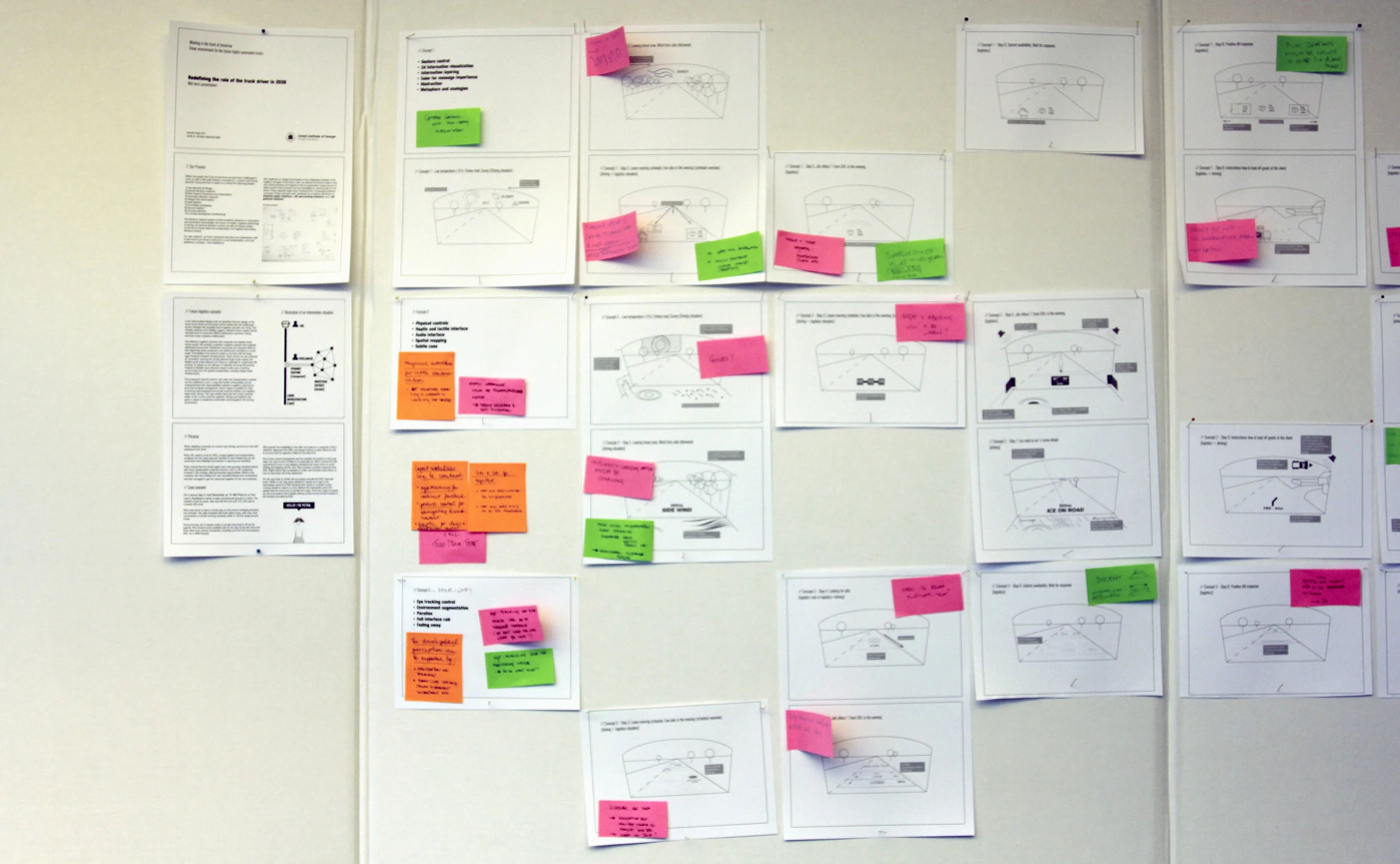

Mid-term concepts

After designing with these modalities, and with the help of feedback from the mid-review, we selected the most appropriate modality for each piece of information.

We filled the heads-up display with a combination of 2D and 3D visualizations, and used the audio modality to support the visualizations and reinforce notifications.

We explored the haptic modality further as a display of meta information (information of secondary priority in decision-making). As a result, we prototyped three new modalities: visual, audio and haptic.

Our approach was to explore whether each modality could stand on its own. Each modality was applied to the three most relevant scenario steps: one involving driving tasks, one involving logistics tasks, and one combining driving and logistics tasks.